Instagram Expands AI Age Verification to Better Protect Teens Online

Instagram is stepping up its efforts to ensure that teens are using the platform with proper safety measures in place. The company is expanding its AI-powered age verification system and introducing new parental notifications aimed at improving awareness around online risks for younger users.

These moves come amid growing calls to raise the minimum age for social media access, putting Instagram’s latest updates in the spotlight as a potential safeguard—and perhaps a preemptive move against stricter regulations.

How Instagram’s AI Age-Checking Works

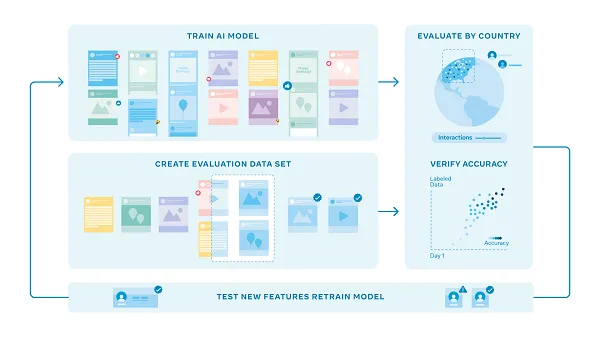

Meta, Instagram’s parent company, has been developing its age-checking capabilities over the past few years. The updated AI model is trained to detect users who may have falsified their age when signing up—particularly those claiming to be adults while actually being teens.

According to Meta:

“To develop our adult classifier, we first train an AI model on signals such as profile information, like when a person’s account was created and interactions with other profiles and content. For example, people in the same age group tend to interact similarly with certain types of content.”

In other words, the system looks at behavioral patterns: who users follow, what content they engage with, how long they spend watching certain types of posts, and how they interact across the platform. These patterns—combined with profile metadata and regional behavior trends—help the AI system estimate whether an account belongs to a teen or an adult.
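
To make the idea concrete, here is a minimal sketch of how a handful of behavioral signals could be combined into a single "likely teen" score. Everything in it is an assumption made for illustration: the signal names, the weights, and the use of a simple logistic function are hypothetical, and Meta has not published the features or model behind its classifier.

```python
import math
from dataclasses import dataclass


@dataclass
class AccountSignals:
    """Hypothetical stand-ins for the kinds of signals the article describes."""
    account_age_days: int            # how long ago the account was created
    teen_follow_share: float         # share of followed accounts believed to be teens (0.0 to 1.0)
    teen_content_watch_share: float  # share of watch time on teen-skewing content (0.0 to 1.0)


# Made-up weights for illustration only; a real model would learn them from labeled data.
BIAS = -2.0
W_ACCOUNT_AGE = -0.001
W_FOLLOW_SHARE = 3.0
W_WATCH_SHARE = 2.5


def teen_probability(signals: AccountSignals) -> float:
    """Combine the signals into a score between 0 and 1 using a logistic function."""
    z = (
        BIAS
        + W_ACCOUNT_AGE * signals.account_age_days
        + W_FOLLOW_SHARE * signals.teen_follow_share
        + W_WATCH_SHARE * signals.teen_content_watch_share
    )
    return 1.0 / (1.0 + math.exp(-z))


if __name__ == "__main__":
    newer_teen_like = AccountSignals(100, 0.8, 0.7)
    older_adult_like = AccountSignals(3000, 0.05, 0.1)
    print(f"teen-like account score:  {teen_probability(newer_teen_like):.3f}")
    print(f"adult-like account score: {teen_probability(older_adult_like):.3f}")
```

The point of the sketch is only that no single signal decides the outcome: each one nudges the score, and the account is judged on the combined pattern.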

Pattern Recognition, Not “Intelligence”

This isn’t AI in the science-fiction sense. The system doesn’t “think” independently. Instead, it’s using pattern recognition—drawing from massive datasets and comparing a user’s behavior to known patterns across age groups. This is one area where AI excels, and Meta appears confident that it can detect age discrepancies with increasing accuracy.

If successful, this expanded system could automatically place suspected teens into Teen Accounts, which come with built-in safety restrictions, limited DMs, and parental controls.

More Tools for Parents

Alongside the AI expansion, Instagram is also rolling out notifications for parents. These alerts will include guidance on how to talk with their teens about online safety and will encourage parents to make sure their teens enter accurate birthdates, which is key to activating the platform’s safety features.

Why This Matters

With U.S. lawmakers actively debating new rules to raise the minimum social media age to 16 or higher, platforms like Instagram are under pressure to prove they can responsibly manage teen access. This AI-driven system may be Meta’s way of avoiding more sweeping regulation by showing it can self-regulate.

But the results will matter. If Meta’s system can’t reliably detect underage users, stricter, government-mandated age checks could be next.

Global Pressure Is Mounting

The timing of Instagram’s expanded AI age verification is no coincidence. Governments and regulators in countries including Australia, Denmark, the U.S., and the U.K. are actively exploring stricter age limits for social media access—some proposing the age be raised to 16 or even 18. These discussions have serious implications: either social platforms like Instagram will need to proactively restrict access, or they’ll risk penalties, legal action, or outright bans for noncompliance.

This puts even more weight on Meta’s ability to accurately and consistently detect underage users. If it fails, the company could be held accountable for allowing minors to access platforms designed for older audiences.

So while AI age verification might seem like a backend improvement, it’s shaping up to be a frontline defense against looming global regulation.

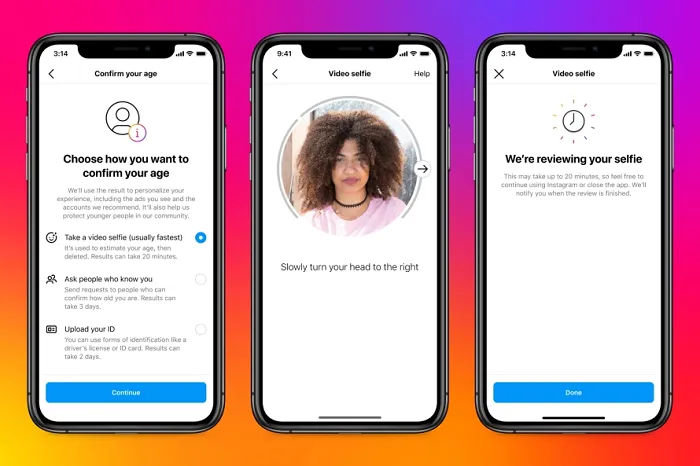

Testing Video Selfie Verification with Yoti

In parallel with its AI-based behavioral modeling, Instagram is also experimenting with video selfie-based age verification, in collaboration with Yoti, a digital identity provider. This system—already live in some regions—analyzes facial features through a short video to estimate a user’s age. It doesn’t rely on ID uploads or date of birth entry, and Meta claims it doesn’t store facial data.

This approach adds another layer of verification, especially for users flagged by the AI model. It may also provide a more direct and privacy-conscious way to confirm a user’s age without collecting sensitive documents.
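
As a rough illustration of how the two layers might fit together, the sketch below routes an account based on its stated age, a behavioral "likely teen" score, and an optional age estimate from a video selfie. The threshold, the outcome names, and the ordering of the checks are all hypothetical; the article does not describe Meta's actual decision logic, and this is not Yoti's API.

```python
from enum import Enum
from typing import Optional


class Outcome(Enum):
    KEEP_ADULT_SETTINGS = "keep_adult_settings"
    PLACE_IN_TEEN_ACCOUNT = "place_in_teen_account"
    REQUEST_VIDEO_SELFIE = "request_video_selfie"


ADULT_AGE = 18
TEEN_FLAG_THRESHOLD = 0.5  # hypothetical cut-off for the behavioral score


def decide(
    stated_age: int,
    teen_score: float,
    selfie_age_estimate: Optional[float] = None,
) -> Outcome:
    """Pick an outcome for one account under the assumptions stated above."""
    # Accounts that already state a teen age get the protected experience.
    if stated_age < ADULT_AGE:
        return Outcome.PLACE_IN_TEEN_ACCOUNT
    # Stated adults with no behavioral flag are left alone.
    if teen_score < TEEN_FLAG_THRESHOLD:
        return Outcome.KEEP_ADULT_SETTINGS
    # Flagged accounts with no selfie estimate yet are asked for a second check.
    if selfie_age_estimate is None:
        return Outcome.REQUEST_VIDEO_SELFIE
    # With a selfie estimate available, it settles the question.
    if selfie_age_estimate >= ADULT_AGE:
        return Outcome.KEEP_ADULT_SETTINGS
    return Outcome.PLACE_IN_TEEN_ACCOUNT


if __name__ == "__main__":
    print(decide(stated_age=25, teen_score=0.9))
    print(decide(stated_age=25, teen_score=0.9, selfie_age_estimate=15.5))
```

In this arrangement the selfie check is only requested for accounts the behavioral model has already flagged, which keeps the more intrusive step limited to a small share of users.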

Meta’s Push for Industry-Wide Solutions

While Instagram continues refining its own in-app age verification systems, the company is also reiterating its long-standing position: age-checking should start at the app store level.

“Understanding the age of people online is an industry-wide challenge,” Meta said in a statement. “We’ll continue our efforts to help ensure teens are placed in age-appropriate online experiences, like Teen Accounts, but the most effective way to understand age is by obtaining parental approval and verifying age on the app store.”

Meta has been lobbying for this shift for some time—and it may be starting to gain traction with U.S. lawmakers. The idea: if app stores like Apple’s App Store and Google Play become the gatekeepers of age verification, platforms like Instagram won’t have to shoulder the full burden of identifying underage users.

Apple has already begun adjusting its app age categories as part of a broader push to improve how apps are rated by age, though the company has so far resisted calls to enforce age restrictions directly. The debate is ongoing.

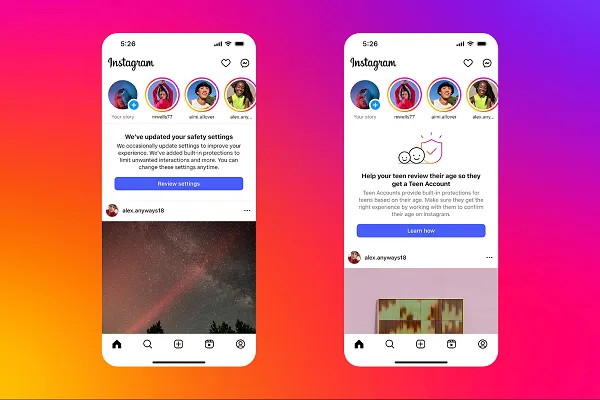

New Prompts for Parents

In addition to behind-the-scenes verification improvements, Instagram will soon roll out new alerts for parents, highlighting teen safety tools and offering guidance on how to talk to their kids about online risks.

These prompts are designed to make it easier for parents to take an active role in managing their teen’s digital experience—particularly if they weren’t aware that features like Teen Accounts, content controls, and supervised accounts already exist.

As global scrutiny over teen social media use intensifies, Instagram’s strategy seems focused on two parallel paths: tightening internal safeguards while also pushing for shared accountability across the broader app ecosystem.

Whether that approach will be enough to satisfy regulators—or to avoid more sweeping restrictions—remains to be seen.

These new prompts aim to keep teen safety top of mind for parents and encourage more frequent check-ins with their kids. It’s part of Instagram’s broader effort to get more teens using its age-appropriate Teen Account experience, which launched last year.

According to Instagram, the initiative is working. The company says over 54 million teens have been enrolled in Teen Accounts globally, with 97% of users aged 13–15 choosing to stay within the protected experience. Meta also recently expanded Teen Accounts to Facebook and Messenger.

Meta says early feedback has been positive:

“Parents and teens alike are telling us that they are happy with this new, reimagined experience. And although over 90% of parents surveyed say the new Teen Account protections are helpful in supporting their teens on Instagram, we’re continuing to listen to parents who are concerned about how overwhelming the internet can be when it comes to making sure teens have age-appropriate experiences.”

With regulators around the world pushing for tighter rules and penalties on underage access, Meta is under pressure to prove that it can handle this internally. These updates are part of that effort—and the company will likely be adding more.
